<This white paper is only halfway developed. It was abandoned when no consensus could be reached on the definition of an Internet Data Center.>

Internet Data Centers provide a secure, high-capacity environment in which communications companies and content providers locate and host their Internet equipment. These centers provide the foundation for a variety of business models and Internet operations. The build versus buy decision for Internet Data Centers is an important and multifaceted one; the issues span technical, financial, and business domains. The expertise involved in building and operating a highly secure, network-focused facility is non-trivial. Even a low-end data center requires both an immediate cash outlay and a recurring operations cost. The construction project itself requires months of planning and execution. As with most first ventures into a new area, potentially costly mistakes are made. Economies of scale can be realized and exploited only in large data centers. These issues must be weighed against the desire for control over the design and operation of the data center.

This paper explores the tradeoffs between building a data center and outsourcing the data center to a third party. The scope of this research is limited to the common “core infrastructure”, that is, the systems that all Internet data centers require regardless of business model. We explicitly do not discuss web design, construction, hosting, monitoring, or management and operations services. These activities are required whether or not the Internet data center is outsourced. We focus this research solely on the decision rule: when does it make sense to build your own data center, and when does it make sense to outsource it?

The author has toured over thirty Internet data centers around the world and combines this experience with interviews of industry experts to highlight the most significant tradeoffs. This paper presents a few summary graphs and tables that provide a current perspective on this decision. In the appendix is a vast list of on-line Internet Datacenter Resources.

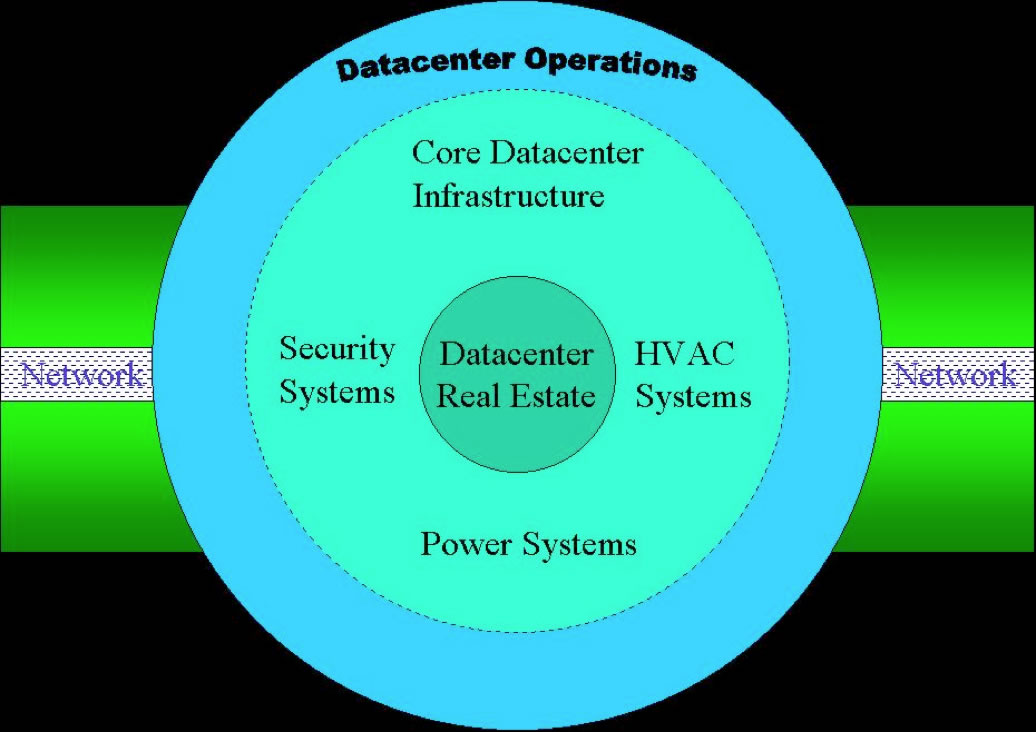

All Internet Data centers include some form of the following core systems:

All Internet Data Center operators build their business models based upon these first three core systems. It is in this common area that we focus our build vs buy comparison.

Figure 1 - Anatomy of the Internet Data center

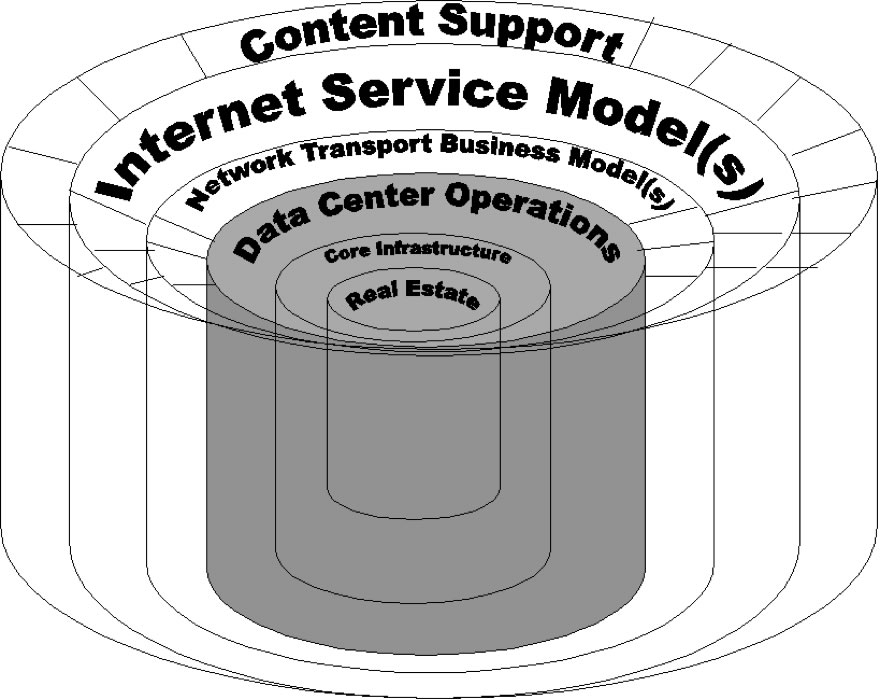

On top of this Internet Data Center model, Internet Telecommunications companies (telephone companies, Internet Service Providers, Content Distributors, Content Hosting companies, etc.) operate their network and systems equipment in configurations suitable for their business models. In this way (see figure 2 below) the company (or companies) can add value and build reliable dependent systems within the conditioned environment.

Figure 2 - Layering of Network and Content on top of the Data Center Model

The model is structured this way to facilitate an apples-to-apples comparison for the build vs. buy decision. Since so many Internet Data Center companies bundle varying professional services and require the purchase of several of those services, it would be difficult to compare their offerings generically against a build decision. Since all of these business models require the core Internet Data Center model as described above, we can isolate the core “rings” of the model for the build vs. buy comparison.

We will next describe these core systems required for both build and buy scenarios.

Sources and Notes ...

Definition taken from Communication Service Provider, December 1999, US Bancorp Piper Jaffray Equity Research.

Exodus Communications Q1 2000 Quarterly Earnings Announcement, 4/20/2000 2:00 PM Pacific Time: http://webevents.broadcast.com/financecalls/event/index.asp?EarningsID=766&src=StreetFusion where Ellen Hancock describes the Exodus requirement for customers to purchase 2 of 3 (space, bandwidth, and professional services).

Abstracted from the Equinix IBX Facilities Design Site Selection Criteria document, Rev. 2, John Pedro.

Conversation with Jay Adelson (CTO Equinix) regarding obtaining government support from the Loudoun County officials.

At the core of all Internet data centers is real estate in which to place the network and systems equipment. Selection of location is critical for Internet Data Centers since it has a direct impact on the cost of the facility and an indirect impact on the cost of the Infrastructure, Operations and Network.

The top six criteria for site selection:

1. Proximity to Fiber – ideal sites will be within a couple of miles of dark fiber and telephone company Points of Presence.

2. Space Size, configuration, expandability – sites must be large enough to support the intended operation, with floor loading sufficient for the equipment expected. Shared Internet Data Center operators must build to the worst case scenario, while the build decision folks may know in advance what equipment will be placed in the space. There are many other issues here having to do with fitness for purpose including weather proofing (earthquakes, hurricanes, floods, etc.).

3. Utility Power Availability – Dual grid feeds are desirable, and operations typically require 3,000 amps at 480 volts per 50,000 square feet.

4. Facilities Yard – Space is required at ground level or on the roof for fuel tank(s), generators, chillers, etc. The space required varies but is at least 5,200 square feet for the first 50,000 square feet of facility.

5. Local Government Support – This includes tax abatements, permitting, right of way, and power and fiber issues requiring government interaction, including architectural review boards. In some cases, issues such as the number of parking spaces, off-ramp development, and signage are uncovered only at a very late stage of real estate selection. Another example is Terremark’s building of the Technology of the Americas Building. They constructed a six-floor building in a Federal Empowerment Zone in downtown Miami and, through this and other government programs, received several million dollars in support.

6. Proximity to customers and support staff – Since these data centers will ultimately be used by customers and support engineers, it is important that local talent is available. Building a data center in the middle of nowhere would make it difficult to attract and retain folks to support the equipment and infrastructure in such a building.

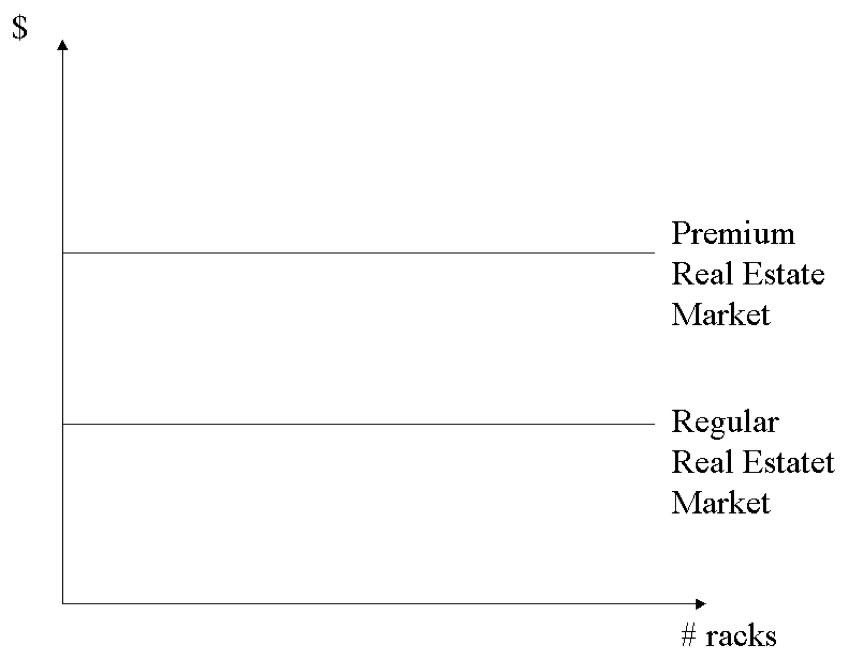

Figure 3 - Cost per Real Estate in Premium markets

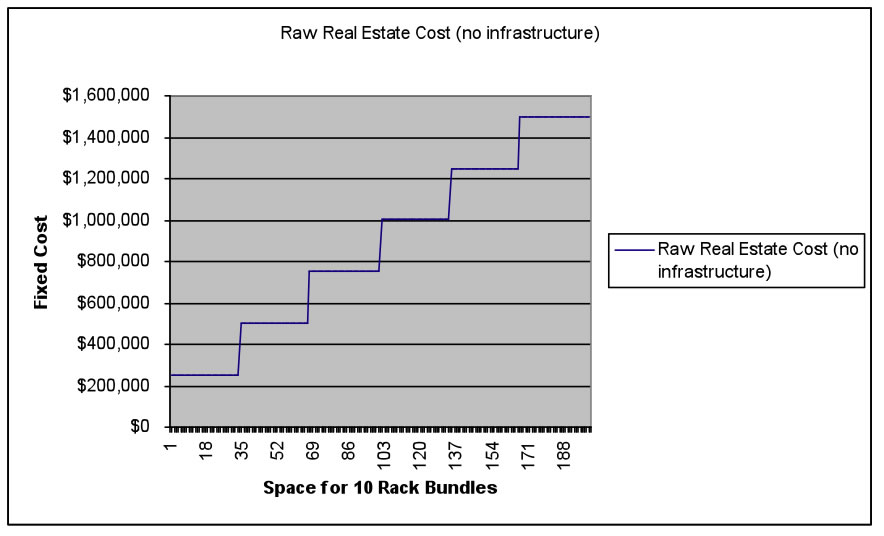

For the financial models, we assumed a norm in real estate costs of $25/sq foot allocated in 10,000 sq foot chunks. See the scaling graph below. Those choosing the build option will need to choose the size of the data center in advance and/or purchase an option on adjacent space. While the buy option typically requires a one-year contract, the build option typically requires a commitment of ten or more years. This is not a bad thing; the build decision requires investing a large amount of money to fit up the building with the required infrastructure. You do not want to get kicked out.

Figure 4 - Fixed cost of real estate allocated in 10K sq ft chunks scales roughly linearly
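The chunked allocation described above can be sketched as a step function. This is a minimal illustration rather than the financial model behind the figure: the function name is hypothetical, and since the text does not state the billing period for the $25 rate, the result is simply "dollars per whatever period the rate is quoted for".

```python
import math

RATE_PER_SQFT = 25     # dollars per sq ft, from the text (billing period not stated)
CHUNK_SQFT = 10_000    # space is allocated in 10,000 sq ft chunks, from the text


def real_estate_cost(required_sqft: int) -> int:
    """Cost of the smallest whole number of chunks covering required_sqft.

    Hypothetical helper: cost rises in steps, so needing one extra
    square foot past a chunk boundary buys a whole additional chunk.
    """
    if required_sqft <= 0:
        return 0
    chunks = math.ceil(required_sqft / CHUNK_SQFT)
    return chunks * CHUNK_SQFT * RATE_PER_SQFT


if __name__ == "__main__":
    for sqft in (1, 10_000, 10_001, 50_000):
        print(sqft, real_estate_cost(sqft))
```

Within a chunk the cost is flat; across chunks it grows linearly, which is why the overall curve is only roughly linear.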

There are many criteria to be evaluated with respect to suitability of real estate. For example, all else being equal, if there is sufficient lead time, most prefer Greenfield (brand new) buildings over existing buildings. Time to market may dictate retrofitting existing facilities to meet seismic standards, appropriate bracing for floor loading, drainage systems, etc. Demolition and retrofitting can often save valuable time to market.

Hidden charges and unexpected delays can impact deployment. For example, some telco hotels require their tenants to interconnect via risers to “meet me” rooms, with unpredictable expenses and typically no Service Level Agreements on the speed at which connections are made.

When considering the buy decision it is important to assure there is sufficient space available for expansion and sufficient time remaining on the lease.

Among the core systems, Real Estate cost is the most variable and may have the largest overall impact on the cost of the facility.

It should be mentioned that the buy decision allows for great flexibility. For redundancy, at least two data centers are required for most mission critical operations. By leasing services, companies can achieve even greater redundancy by leasing across multiple shared facilities perhaps operated by different companies using different technologies. Further, content distribution technologies can be applied to move content to each continent to ultimately improve the end user experience. This flexibility is not inherent if you build.

Large Data Centers. According to Roy Earle, for shared Internet Data Centers there is a trend away from traditional telco hotels (typically 20,000 sq ft) toward large-scale warehouse facilities (on the order of 300,000 sq ft) for collocated equipment. While this allows scaling of infrastructure, it also dramatically reduces the choice of sites. The build option does not suffer from this problem, since smaller amounts of space are easier to come by. The effect is compounded by the fact that large warehouses suitable for data centers are typically outside the major metropolitan areas and therefore likely far from telco and fiber provider Points of Presence.

Sean Donelan made one other important point about the cost of choosing real estate badly:

“You can save a lot of money choosing the right site, but you can spend a lot of money on the site trying to upgrade it to the appropriate standards. Many of the most expensive problems to fix at the end of the build are due to the selection of Real Estate at the beginning of the build.”

Sources and Notes ...

Conversation with Sean Donelan regarding the importance of location. The popularity (and high rent) of 1 Wilshire in Los Angeles, 60 Hudson in New York City are prime examples. Interview with Art Chinn (colo.com): landlords in key cities in Europe are realizing the value of their real estate and adjusting prices accordingly. According to Sean Donelan, Real Estate varies more on length of lease than on amount of space used. According to Sean Donelan, “Real Estate prices in New York City, Tokyo and London are typically twice the cost of anywhere else.”

“While today's information technology is incredibly reliable, computer systems still contain electronic circuitry that is highly sensitive to environmental conditions—for example, temperature, humidity, dust, and "dirty" power. And larger events, such as power outages and natural disasters, pose an even larger threat to your systems and data. Seemingly unnoticeable environmental, design, and structural elements can render your core systems inoperable in a heartbeat. ”

Common threads surrounding the build versus buy decision include core competence and expertise; should the company focus on its core competency, that which it does exceptionally well, and does the firm have the prerequisite expertise to execute? Next we’ll broadly describe each system and some issues in the implementation of the systems.

Security includes all systems protecting the physical assets in the Internet Data Center. This includes perimeter and in-building access control and monitoring systems as well as physical add-ins like bulletproof glass and perimeter walls.

There is a key difference between outsourced data centers and those built and operated in-house. Shared Internet Data Center security systems must handle the added complexity of multiple customer access. Solutions range from escorted access and supervised use to free (zoned) access. This complexity may not be required if the facility is built solely for the use of one company. The common functionality is to keep unauthorized staff away from the networked equipment.

The cost and scaling of the security depends more upon the application of the data center than on the scale of the center. For example, a small section of a building that is essential to the operation (like the cashier office in a casino) may be equipped with armed guards, many surveillance cameras, etc. Likewise, a shared facility may require more participant cages and authentication than a single-user facility.

Having said all of that, economies of scale exist that allow a large facility to afford circulating guards, bulletproof glass, and Kevlar perimeter protection that a smaller facility simply can’t afford.

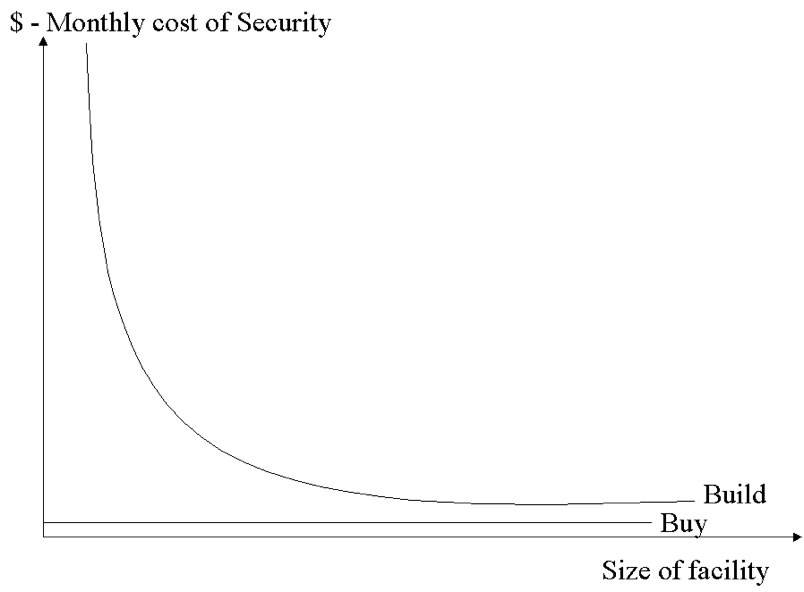

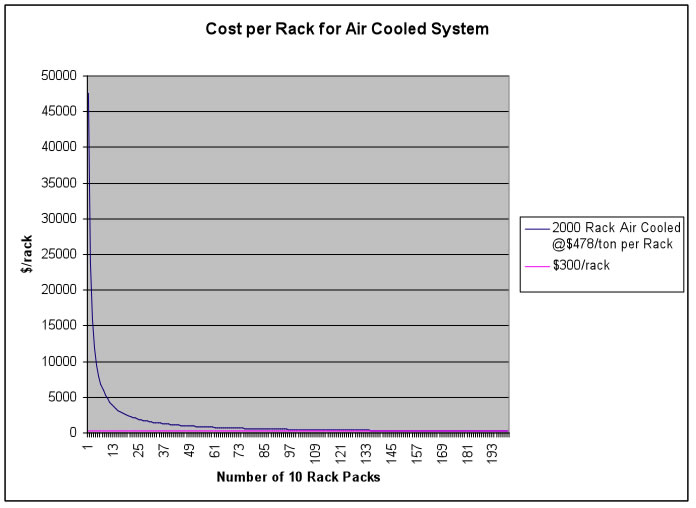

The graph below shows the build versus buy cost function for security. In a shared conditioned space (already complete with security systems), the cost is shared proportionately across all participants. As a result, the cost of security is fixed on a per-rack basis. Conversely, building requires purchasing the entire security system and allocating the cost across the number of racks the company uses.

Figure 5 - Cost function of Security Systems
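The shape of this cost function can be sketched in a few lines. The function names and the dollar figures in the usage example are hypothetical and chosen only to show the shape; they are not data from the paper.

```python
def buy_security_cost_per_rack(shared_rate_per_rack: float) -> float:
    """Shared facility: security is a fixed per-rack charge, whatever the rack count."""
    return shared_rate_per_rack


def build_security_cost_per_rack(system_cost: float, racks: int) -> float:
    """Own facility: the whole security system is spread over the racks in use."""
    if racks <= 0:
        raise ValueError("racks must be positive")
    return system_cost / racks


def security_breakeven_racks(system_cost: float, shared_rate_per_rack: float) -> float:
    """Rack count at which building matches the shared per-rack charge."""
    return system_cost / shared_rate_per_rack


if __name__ == "__main__":
    # Hypothetical figures: a 100,000 security system vs. a 500 per-rack shared charge.
    for racks in (10, 100, 200, 400):
        print(racks, build_security_cost_per_rack(100_000, racks))
    print(security_breakeven_racks(100_000, 500))
```

The build curve falls as 1/racks while the buy line stays flat, so under these simplifying assumptions the two cross exactly once.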

It was pointed out that customers tend to demand more security from a shared facility than they would require from their own facility, simply because the collective money can afford what they cannot afford individually. For example, integrated environmental (temperature, humidity, electric draw) and security (surveillance cameras on both sides of racks, cage access log) information feeds may be required since the cost per rack is relatively low. The end result is a shared environment with greater than required security systems.

In the build case, restricting access to company employees (perhaps) reduces the access concerns, reduces the need for cages, and therefore allows a higher rack density. Security can be built to company specifications, whereas buying may require accepting a level of security that is inappropriate for the application.

One practical implication of shared data center security is access. When equipment must be repaired during off hours, some Internet Data Centers (particularly telephone company facilities) do not make it easy to gain access to the failed equipment. Others require escorts to continually monitor activities in “

Sources and Notes ...

Discussion with Sean Donelan. A company may be willing to accept a greater portion of the risk of unauthorized access by its own employees. Conversation with Sean Donelan. France Telecom, the Vienna Internet Exchange (VIX), and the NSPIXP Japanese exchange require advance notice to access facilities and provide no 24/7 on-site assistance. A partial solution is to deploy out-of-band equipment. Interviews with ISPs mentioned this explicit deficiency at the PAIX facility. It should be noted that this problem is lessened when a customer is escorted to his own cage.

Heating, Ventilation, and Air Conditioning (HVAC) systems are critical for maintaining an operating environment for networking and computing equipment. According to IBM, computing machinery operates best between 18° C (65° F) and 29° C (85° F) with relative humidity between 20% and 62%. Too much humidity can cause system failure. Too little humidity generates static electricity. IBM recommends an optimal temperature of 24° C (76° F) and 45% relative humidity.
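As a small illustration of how the ranges quoted above might be applied in monitoring, the sketch below flags readings that fall outside them. The thresholds are the figures attributed to IBM in the text; the function name and alert wording are hypothetical.

```python
from typing import List

# Operating envelope as quoted from IBM in the text.
TEMP_MIN_C, TEMP_MAX_C = 18.0, 29.0
RH_MIN_PCT, RH_MAX_PCT = 20.0, 62.0


def environment_alerts(temp_c: float, rh_pct: float) -> List[str]:
    """Return a list of alerts for a single temperature/humidity reading."""
    alerts: List[str] = []
    if temp_c < TEMP_MIN_C:
        alerts.append("temperature below range")
    elif temp_c > TEMP_MAX_C:
        alerts.append("temperature above range")
    if rh_pct < RH_MIN_PCT:
        # Per the text, too little humidity generates static electricity.
        alerts.append("humidity too low: static electricity risk")
    elif rh_pct > RH_MAX_PCT:
        # Per the text, too much humidity can cause system failure.
        alerts.append("humidity too high: system failure risk")
    return alerts


if __name__ == "__main__":
    print(environment_alerts(24.0, 45.0))  # the recommended optimum
    print(environment_alerts(31.0, 10.0))
```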

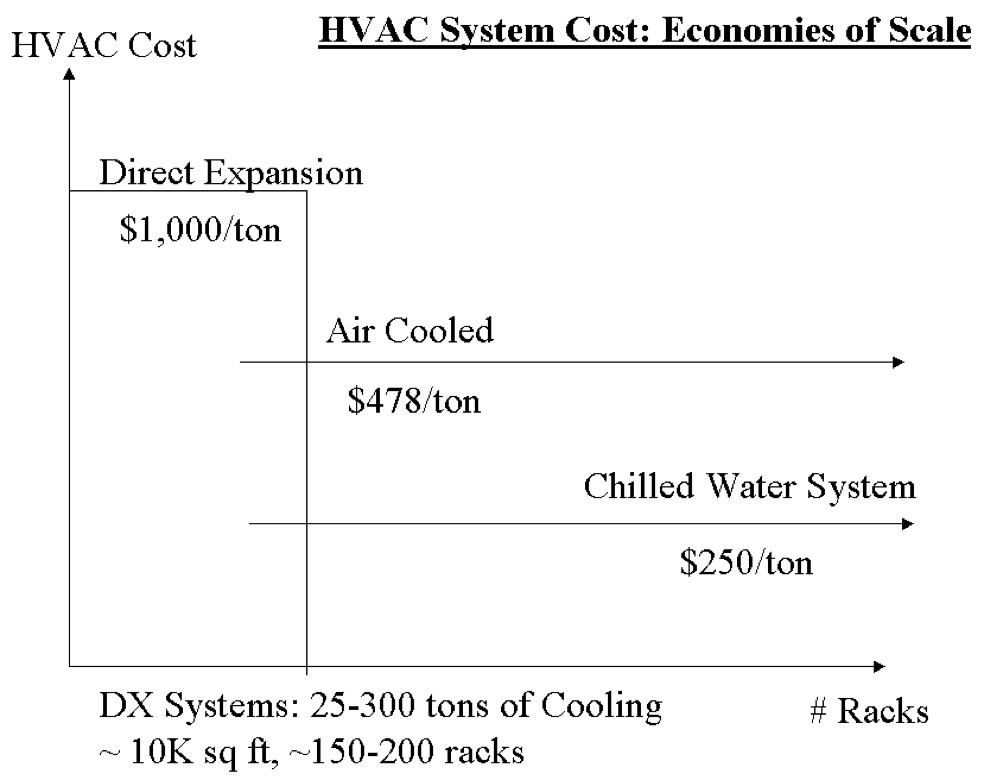

HVAC infrastructure provides this conditioning and benefits from economies of scale. Large-scale Internet Data Centers can implement high-cost, high-efficiency HVAC systems and share the costs across a potentially large number of customers. These larger systems have the added benefit of requiring fewer units to perform the same task, resulting in higher Mean Time Between Failures and lower Mean Time To Repair.

For the financial analysis, assumptions include that the Internet Data Center has no windows, is well insulated in the walls and ceiling, has no office space, and operates in a lights-out environment.

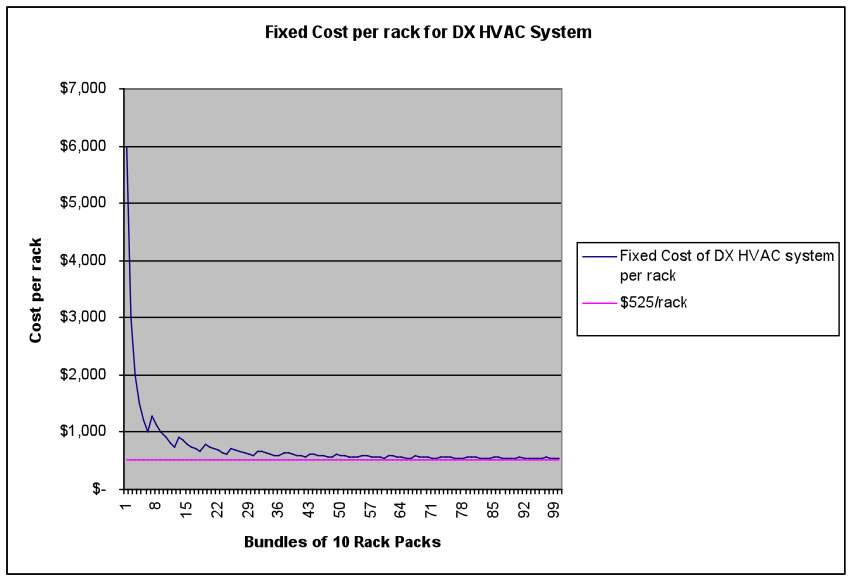

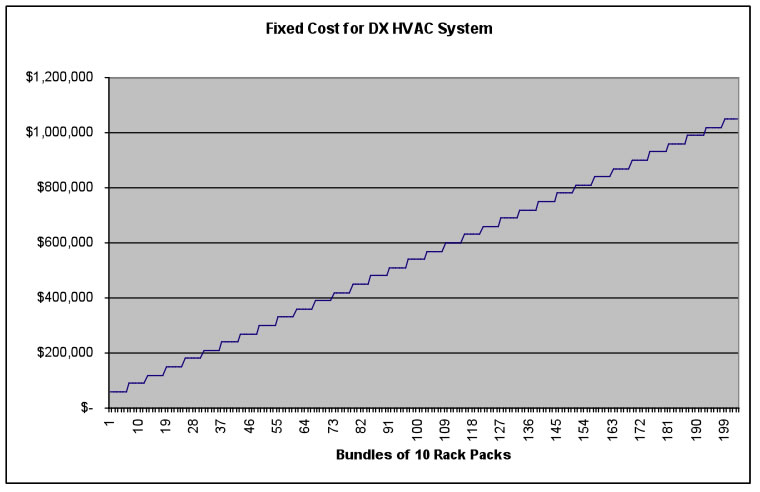

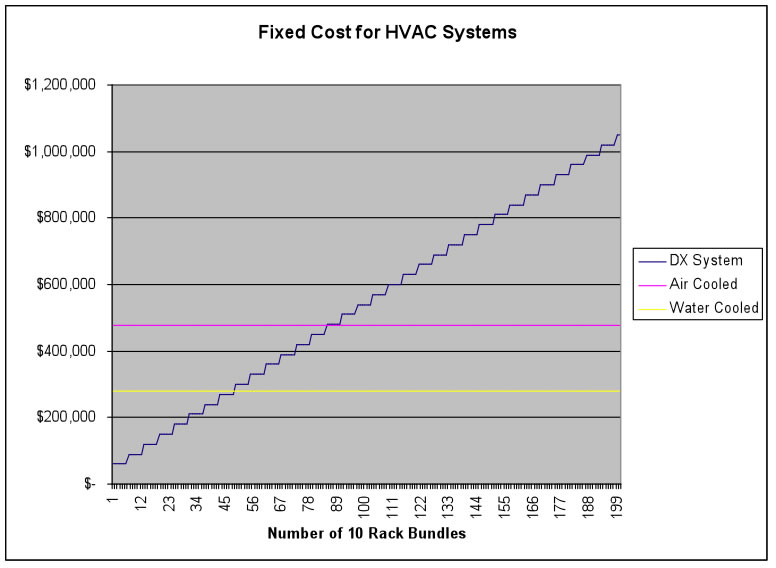

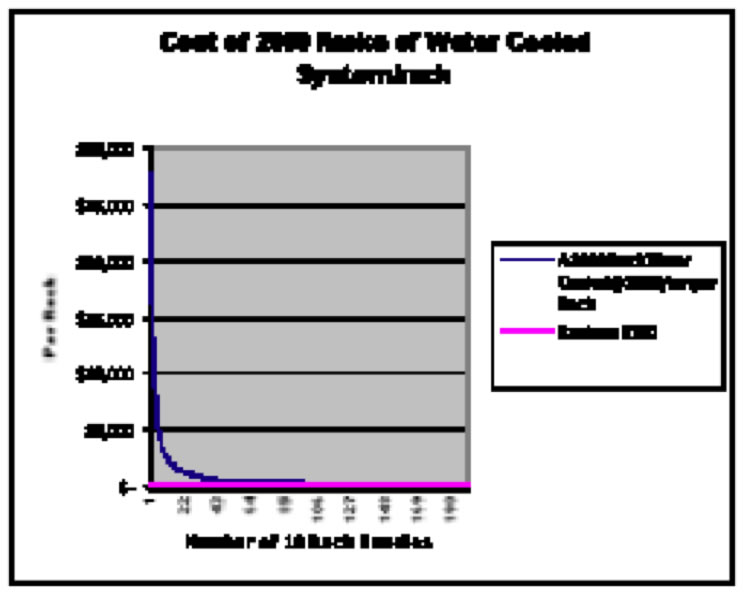

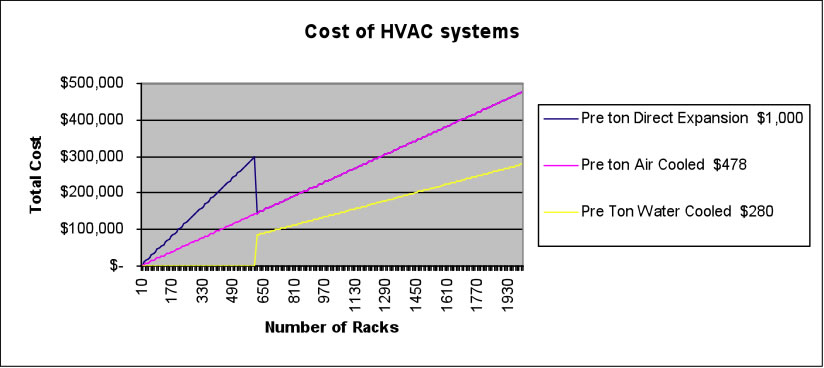

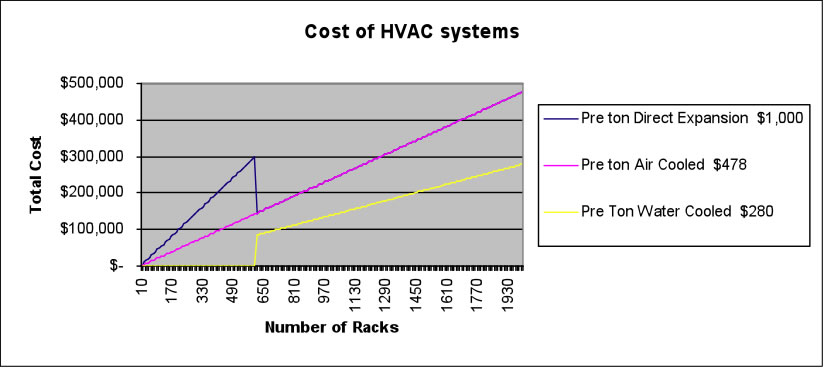

Figure 6 - Cost of operating various HVAC Systems

Figure 7 - Out of Pocket costs for HVAC Systems

Figure 8 - Cross over point at about 500 Racks where Water Cooled is Cheapest Per Rack Solution

Figure 9 - Practical domain of operations

From a practical perspective, DX systems cannot be supported beyond 10 units. The fans must be continually checked and repaired, creating an undue maintenance burden beyond xx racks. Likewise, air cooled systems operate most effectively where 25-300 tons of cooling are required.

The build versus buy decision would therefore need to take into account the scale of operations and the comparative fixed and recurring HVAC costs.

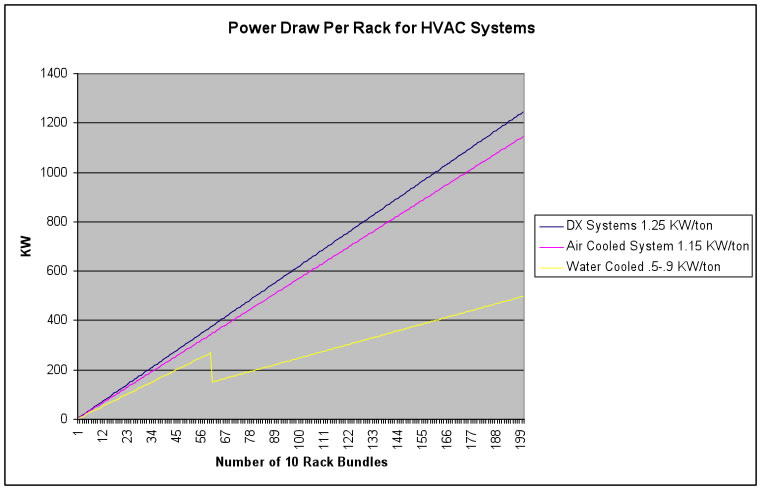

The HVAC selection impacts the power requirements as well. Air cooled systems are more efficient than DX systems, and water cooled systems operate more efficiently still under load.

For these reasons, economies of scale are realized for large Internet Data Centers that are not realized in the build decision.

So where is the break even point for the HVAC systems alone?

Sources and Notes ... Data for these graphs derived from interviews with Chris Countryman, Carrier Systems. Note that water systems under light load are less efficient than under load. The graph is not jagged as indicated, but this effect needed to be noted.

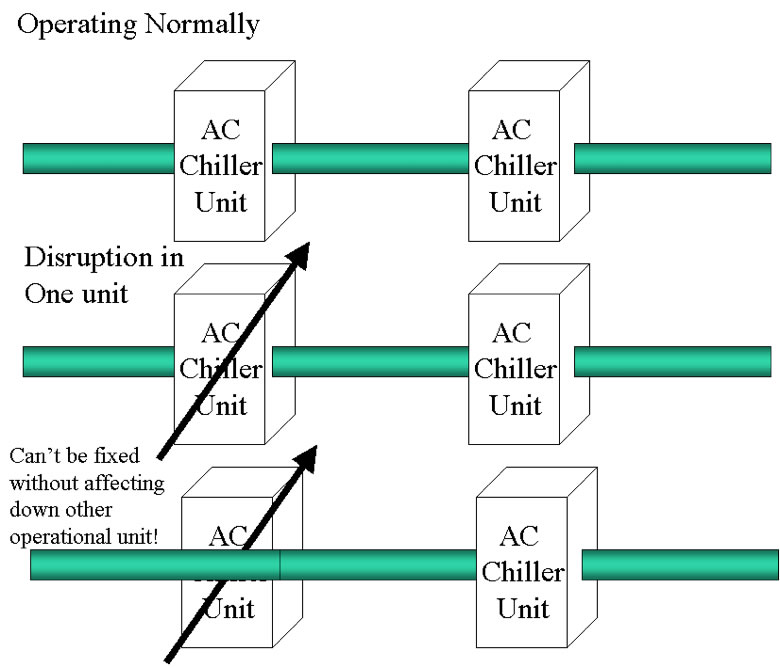

All modern data centers have at least N+1 redundancy on these core infrastructure systems, but the design of N+1 systems requires careful evaluation of each and every failure scenario. Consider the following N+1 “redundant” HVAC system. While each chiller can support the entire operation, repair of the broken unit may require a disruption in service to the operational unit.

While it may seem obvious that each system must undergo this fault analysis, in the field it represents a challenge as plans change during the construction process. Well-established data center companies typically don’t make these mistakes as the experience curve kicks in. Diligence must be applied when evaluating outsourced and in-house constructed data centers to ensure that any single system component can be repaired without disrupting operations.

Economies of scale for the entire system kick in for larger facilities, which can allocate the incremental cost of the “+1” unit across a larger value of N.

Another factor kicks in when looking at an evolutionary approach to data center design. If one builds a data center incrementally (starting with minimal power systems) and adds components (generators, for example) over time, it is possible to expand the system such that N+1 redundancy fails, since the component that fails could be larger than the spare capacity provided by the smaller components.
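Both N+1 points above can be checked with simple arithmetic. The sketch below is illustrative only: the function names are hypothetical, and the generator sizes and loads in the usage example are made-up numbers, not figures from the paper.

```python
from typing import Sequence


def spare_overhead(n: int) -> float:
    """Cost of the one spare unit relative to the N units actually needed.

    Assumes all units are the same size and price.
    """
    if n <= 0:
        raise ValueError("n must be positive")
    return 1 / n


def survives_any_single_failure(unit_capacities: Sequence[float],
                                required_load: float) -> bool:
    """True if the remaining units still carry the load after the LARGEST unit fails."""
    if not unit_capacities:
        return False
    remaining = sum(unit_capacities) - max(unit_capacities)
    return remaining >= required_load


if __name__ == "__main__":
    # A larger N spreads the spare over more units.
    print(spare_overhead(2), spare_overhead(10))

    # Hypothetical incremental build: three 500 kW generators for a 1000 kW load.
    print(survives_any_single_failure([500, 500, 500], 1000))
    # Later a 1500 kW unit is added and the load grows to 2000 kW.
    print(survives_any_single_failure([500, 500, 500, 1500], 2000))
```

In the second case total capacity exceeds the load by a comfortable margin, yet losing the single large unit leaves the smaller ones short. That is the incremental-expansion trap the paragraph describes.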

Sources and Notes ...

So what does the conditioned real estate cost function look like? If we remove extreme location expenses from the equation (they can easily be added back to the financial models later), assume that both facilities are of the same scale and cost ($400/sq ft), and assume that network access can be brought in, we end up with a graph like the typical buy vs. lease graph.

Summary Graph: Build vs. Buy = Buy vs. Lease

From this graph we see that if both the build and buy options are built to a scale where the average cost is $400/sq ft, the breakeven point is about 60 racks. That is, if the unit costs are identical and the lease price offered to you is $1500/rack/month, then you will be indifferent between building your own data center and purchasing those services when you require 60 racks of conditioned operations space. Given the assumptions below, you will prefer to outsource if you expect to use fewer than 60 racks, and consider building your own if you require more than 60 racks.
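The decision rule can be sketched as a comparison between a linear lease bill and a monthly-equivalent build cost. This is a simplification and not the paper's financial model: it assumes the build option's monthly-equivalent cost is flat over the range of interest, and all function names are hypothetical. Under that assumption, the $1500/rack/month lease price and the 60-rack breakeven from the text together imply a build cost equivalent to 60 × 1500 = $90,000 per month.

```python
LEASE_RATE = 1500.0  # dollars per rack per month, from the text


def monthly_lease_cost(racks: int, lease_rate: float = LEASE_RATE) -> float:
    """Lease bill grows linearly with the number of racks."""
    return racks * lease_rate


def breakeven_racks(build_monthly_cost: float, lease_rate: float = LEASE_RATE) -> float:
    """Rack count at which the lease bill equals the (assumed flat) build cost."""
    return build_monthly_cost / lease_rate


def preferred_option(racks: int, build_monthly_cost: float,
                     lease_rate: float = LEASE_RATE) -> str:
    """Return 'buy', 'build', or 'indifferent' for a given rack requirement."""
    lease = monthly_lease_cost(racks, lease_rate)
    if lease < build_monthly_cost:
        return "buy"
    if lease > build_monthly_cost:
        return "build"
    return "indifferent"


if __name__ == "__main__":
    # Build cost implied by the text's two figures, under the flat-cost assumption.
    implied_build_cost = monthly_lease_cost(60)
    for racks in (40, 60, 80):
        print(racks, preferred_option(racks, implied_build_cost))
```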

Assuming this best-case scenario, the question comes down to core competency: what business do you want to be in, and where do you want to build your expertise? Typically, companies that build their own Internet Data Centers don’t build one, but build at least two facilities for redundancy. The load on an organization of building two data centers is more than twice the burden of building one, due to the need to interconnect the two, synchronize systems, etc. To continue along this line, companies that rationally build two facilities often build many more (on the order of ten or more) for resale and build the staff to do so. Building a department of real estate, architecture, design, engineering, etc. fundamentally shifts the focus of all but the very large organizations.

The other key issue that comes into play is the learning curve. All companies make mistakes and overlook underlying assumptions when building the first few data centers. Common snafus include real estate selection that significantly delays the installation and activation of fiber. Trenching and lighting fiber takes time and energy from companies that are already heavily oversubscribed.

Other mistakes overcome by the learning curve include issues with real estate. Multi-floor telco hotel landlords may inaccurately characterize floor loading capabilities; if not checked, the issue comes up during permitting and can cause substantial delay while support is added, or substantially reduce the rack density. Landlords have been known to change the rules regarding interconnection among tenants; in one case the landlord changed the rules regarding the use of the risers (conduits through which inter-floor cabling is run), requiring the use of a shared “meet me” room at additional cost. These issues, unknown to first-time builders, will add delay and cost to the first few Internet Data Centers. These and other snags are inevitable.

Key assumptions include:

Sources and Notes ...

This is a major assumption, since we have shown that the shared data center operator can realize economies of scale that the single-use builder cannot. Case either experienced by the author or heard directly from the source. Cases either experienced by the author or heard directly from the source.

The references I used for this document were the folks I spoke with and the following valuable reference materials:

Search for Internet Data Center on google.com:

The subset of Internet Data Centers and Internet Exchange Environments I have toured:

William B. Norton is the author of The Internet Peering Playbook: Connecting to the Core of the Internet, a highly sought-after public speaker, and an internationally recognized expert on Internet Peering. He is currently employed as the Chief Strategy Officer and VP of Business Development for IIX, a peering solutions provider. He also maintains his position as Executive Director for DrPeering.net, a leading Internet Peering portal. With over twenty years of experience in the Internet operations arena, Mr. Norton focuses his attention on sharing his knowledge with the broader community in the form of presentations, Internet white papers, and most recently, in book form.

From 1998-2008, Mr. Norton’s title was Co-Founder and Chief Technical Liaison for Equinix, a global Internet data center and colocation provider. From startup to IPO and until 2008 when the market cap for Equinix was $3.6B, Mr. Norton spent 90% of his time working closely with the peering coordinator community. As an established thought leader, his focus was on building a critical mass of carriers, ISPs and content providers. At the same time, he documented the core values that Internet Peering provides, specifically, the Peering Break-Even Point and Estimating the Value of an Internet Exchange.

To this end, he created the white paper process, identifying interesting and important Internet Peering operations topics, and documenting what he learned from the peering community. He published and presented his research white papers in over 100 international operations and research forums. These activities helped establish the relationships necessary to attract the set of Tier 1 ISPs, Tier 2 ISPs, Cable Companies, and Content Providers necessary for a healthy Internet Exchange Point ecosystem.

Mr. Norton developed the first business plan for the North American Network Operator's Group (NANOG), the Operations forum for the North American Internet. He was chair of NANOG from May 1995 to June 1998 and was elected to the first NANOG Steering Committee post-NANOG revolution.

William B. Norton received his Computer Science degree from State University of New York Potsdam in 1986 and his MBA from the Michigan Business School in 1998.

Read his monthly newsletter: http://Ask.DrPeering.net or e-mail: wbn (at) TheCoreOfTheInter (dot) net

The Peering White Papers are based on conversations with hundreds of Peering Coordinators and have gone through a validation process involving walking through the papers with hundreds of Peering Coordinators in public and private sessions.

While the price points cited in these papers are volatile and therefore out-of-date almost immediately, the definitions, the concepts and the logic remain valid.

If you have questions or comments, or would like a walk-through of any of the papers, please feel free to send email to consultants at DrPeering dot net
